Work packages

Experiments at the PNI facilities, especially at third-generation synchrotron sources such as PETRA III, new high-flux neutron sources such as the SNS and ESS, and free-electron lasers, benefit dramatically from continuous improvements in source performance (such as brilliance or flux density), from high-throughput optics setups, and, more recently, from developments in detector technology. In particular, the advent of modern 2D semiconductor detectors such as CCDs, pnCCDs, or pixel detectors capable of very high frame rates opens up entirely new realms for high-throughput experiments in various scientific fields. Better sampling in both space and time enables experiments with better spatial or q-space resolution as well as better temporal resolution. Common to all these experiments are the very high data rates that experimentalists have to cope with. Experiments can already generate data rates ranging from the order of 10 MB/s for neutrons to several hundred MB/s for X-rays. Recently, the first FEL experiments produced more than 20 TB within a single week.
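For scale, the FEL figure quoted above can be converted into an average sustained rate. This is a rough worked estimate, assuming decimal units (1 TB = 10^12 bytes) and data spread evenly over the full seven days:

$$
\frac{20\,\text{TB}}{1\,\text{week}}
= \frac{20 \times 10^{12}\,\text{B}}{7 \times 86\,400\,\text{s}}
= \frac{2 \times 10^{13}\,\text{B}}{604\,800\,\text{s}}
\approx 3.3 \times 10^{7}\,\text{B/s}
\approx 33\,\text{MB/s}
$$

Because acquisition is rarely continuous over a whole week, this average likely understates the rates reached during actual measurement periods.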

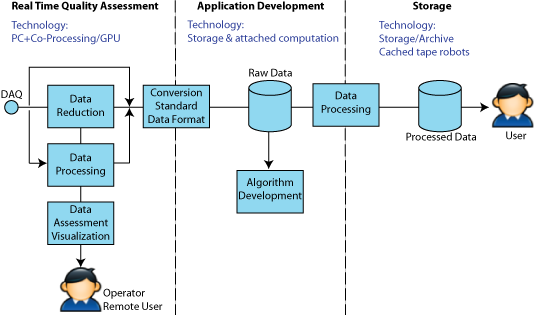

Simplified scheme of data flow at PNI facilities.

Even with the continuous growth of computing and data storage capabilities, these data volumes require dedicated handling throughout their entire lifetime. For the most efficient use of experimental time at PNI facilities, a fast first evaluation of the measured data is mandatory to guide the experiments. Depending on the experimental technique, this might be as simple as determining suitable quality indicators, such as the internal R-value in single-crystal crystallography, or visualizing the data; in slightly more complicated cases it involves inspecting reconstructed information, as in tomography. In complex experiments, comparisons with simulations or model calculations are often necessary. This first data reduction and evaluation step has to be fast enough to allow an almost real-time decision on whether an experiment was successful. This first step is one of the main aims of this collaborative initiative.

However, additional efforts are necessary to make the measured data available to the users in an appropriate and safe way until the analysis is finished, and to archive data that resulted in publications and make them publicly available. Since many of these tasks are similar at each of the PNI facilities, this common initiative will try to exploit the synergy of common developments wherever possible, not only within PNI but also within the European and international synchrotron radiation, neutron, and ion beam communities. This includes facilities as well as user groups from universities and non-university institutions. To enable such collaborations, the participating institutes have to agree on certain standards for storing raw data and metadata. The files should contain a full and self-consistent description of the data to allow for later evaluation. Standardized file formats are a precondition for exchanging data and analysis tools among institutes.
Hardware standardization can help keep the effort of programming specialized software at a sustainable level. The data modelling and analysis challenges posed by the large and complex datasets envisaged in this HDRI are formidable. To make data evaluation manageable at all, and to extend the use of the data to the user community as a whole, it is vital to develop tools that provide ready access to dataset visualization, computationally intensive modelling of the physical behaviour of systems, and full simulations that include the instrumental resolution.
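As an illustration of the kind of fast quality indicator mentioned above, the following minimal Python sketch computes the internal R-value using its common definition, `R_int = Σ|F_o² − ⟨F_o²⟩| / Σ F_o²`, where both sums run over all reflections with more than one symmetry-equivalent measurement. It assumes the measured intensities have already been grouped by unique reflection (that grouping depends on the space group and is not shown). The function name `internal_r_value` and the dictionary input layout are hypothetical choices for this example, not part of any existing package.

```python
from statistics import fmean
from typing import Mapping, Sequence, Tuple

# Hypothetical input layout: unique reflection (h, k, l) -> intensities F_o^2
# of all its symmetry-equivalent observations (grouping assumed done already).
Groups = Mapping[Tuple[int, int, int], Sequence[float]]


def internal_r_value(groups: Groups) -> float:
    """Return R_int = sum|I - <I>| / sum I over groups with >1 observation."""
    numerator = 0.0
    denominator = 0.0
    for intensities in groups.values():
        if len(intensities) < 2:
            continue  # a single observation carries no merging information
        mean_i = fmean(intensities)  # <F_o^2> for this unique reflection
        numerator += sum(abs(i - mean_i) for i in intensities)
        denominator += sum(intensities)
    if denominator == 0.0:
        raise ValueError("no reflections with multiple symmetry equivalents")
    return numerator / denominator


# Illustrative (made-up) intensities for three unique reflections.
example_groups = {
    (1, 0, 0): [100.0, 110.0, 90.0],  # mean 100, deviations sum to 20
    (0, 1, 1): [50.0, 50.0],          # perfectly consistent pair
    (2, 0, 0): [30.0],                # single observation, skipped
}

r = internal_r_value(example_groups)
print(f"R_int = {r:.3f}")  # prints "R_int = 0.050"
```

Because the computation is a single pass over the observations, its cost grows linearly with the number of reflections, which suits a near-real-time first evaluation during the experiment.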